Functional & Molecular Emission Computed Tomography

Our research group aims to improve the diagnostic value of emission tomographic imaging systems, specifically for cancer. These systems include the nuclear medicine imaging modalities positron emission tomography (PET) and single photon emission computed tomography (SPECT), for both clinical and preclinical applications. With a focus on preclinical imaging in small animals, they also include the optical modalities bioluminescence imaging (BLI), fluorescence mediated imaging and tomography (FMI/FMT), and diffuse optical tomography (DOT).

Research Topics

- Multimodal emission imaging systems involving FMT, BLI, CT, SPECT

- Synchromodal emission imaging systems involving FMT, BLI, MRI, PET

- Simulation tools for multimodal imaging systems involving keV and eV photons

- Monolithic PET detector units for pre-clinical and whole-body PET

- Plenoptic imaging systems for in vivo FMT, BLI, DOT

- Anthropomorphic and zoomorphic phantoms with integrated tumor tissues

Our group is a member of the Crystal Clear Collaboration.

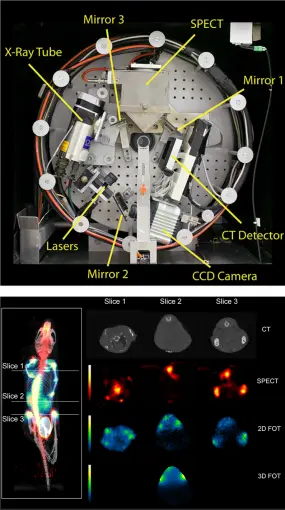

Some of our projects are conceived and carried out in a truly multimodal instrumentation context. This includes research on large-field-of-view (LFOV) PET-CT and PET-MRI (clinical systems) as well as on optical imaging combined with any other modality. The world's first triple-modality SPECT-FMI/BLI-CT mouse imager was built in this research group (left figure; top: front view of the trimodality imaging system; bottom: fused maximum intensity projection (MIP) view of reconstructed CT, SPECT, and 2-D FMT data (reference)).
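A fused MIP view like the one in the figure relies on the sub-modality volumes sharing one field of view. The minimal sketch below assumes the CT, SPECT and optical volumes have already been resampled onto a common voxel grid (the resampling step is not described here), computes a maximum intensity projection along one axis, and alpha-blends a normalized functional MIP over the anatomical one. The function names and the blending weight are illustrative and not taken from the group's software; plain Python lists keep the example dependency-free.

```python
from typing import List

Volume = List[List[List[float]]]   # indexed [z][y][x]
Image = List[List[float]]          # indexed [y][x]


def mip_along_z(vol: Volume) -> Image:
    """Maximum intensity projection of a [z][y][x] volume along z."""
    ny, nx = len(vol[0]), len(vol[0][0])
    return [[max(slice_[y][x] for slice_ in vol) for x in range(nx)]
            for y in range(ny)]


def normalize(img: Image) -> Image:
    """Linearly rescale an image to [0, 1]; a constant image maps to zeros."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) / (hi - lo) for v in row] for row in img]


def alpha_fuse(anatomy: Image, function: Image, alpha: float = 0.5) -> Image:
    """Blend a normalized functional MIP over a normalized anatomical MIP.
    Assumes both come from volumes on the same voxel grid, so that pixel
    (y, x) refers to the same location in both images (illustration only)."""
    a, f = normalize(anatomy), normalize(function)
    return [[(1.0 - alpha) * av + alpha * fv for av, fv in zip(ar, fr)]
            for ar, fr in zip(a, f)]


if __name__ == "__main__":
    # Toy 2x2x2 volumes standing in for co-registered CT and SPECT data.
    ct = [[[0.0, 1.0], [2.0, 3.0]], [[4.0, 0.0], [1.0, 2.0]]]
    spect = [[[0.0, 0.0], [0.0, 5.0]], [[0.0, 1.0], [0.0, 0.0]]]
    print(alpha_fuse(mip_along_z(ct), mip_along_z(spect), alpha=0.4))
```

In practice a color map would be applied to the functional channel before blending; the scalar blend here only shows that no registration step is needed when the fields of view coincide.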

This instrument was designed for the simultaneous detection of radiolabeled pharmaceutical distributions (SPECT), near-infrared fluorescent molecular markers (fluorescence mediated imaging and tomography, FMI/FMT) and/or bioluminescence (BLI), and for high-resolution x-ray tomography (CT), with axially unshifted (i.e. identical), spatially overlapping fields of view (FOV) for all sub-modalities.

For SPECT imaging, a compact gamma detector (Thomas Jefferson National Accelerator Facility, USA) is implemented. It consists of a 2×2 array of Hamamatsu H8500 position-sensitive photomultiplier tubes coupled to a 66×66 array of optically decoupled 1.3×1.3×6 mm³ NaI(Tl) crystal elements (St. Gobain), yielding a total detector area of 10×10 cm². Various collimators (pinhole, fan beam, parallel beam) can be attached to the camera for specific imaging tasks.

A high-resolution, cooled ORCA AG CCD camera (Hamamatsu) is used for the optical detector sub-system; it contains a progressive-scan interline CCD chip with a 1344×1024 pixel array and 12-bit digital output. Various laser sources, selected according to wavelength and light power requirements, can be mounted on the gantry.

The x-ray CT sub-system employs a Series 5000 Apogee x-ray tube (Oxford Instruments) with a maximum power of 50 W at 4 to 50 kV and 0 to 1 mA. The focal spot size is 35 µm and the cone angle is 24 degrees. The x-ray detector is a Shad-o-Box 2048 (Rad-icon Imaging Corp.) containing a 50×100 mm² Gd₂O₂S scintillator screen placed in direct contact with a CMOS photodiode array with a sensor pixel size of 48 µm.

The integration concept is fully modular: all components are mounted on a common gantry, so that a wide range of applications can be performed. This design aims at the highest possible performance of each sub-modality as well as of the combined multimodal system.

The entire assembly is enclosed in a light-tight, gamma-ray-shielded compartment mounted on a movable trolley. A second compartment on the trolley holds all necessary camera control, data read-out, laser, gantry and linear-stage motion control electronics, as well as the high-voltage and power supplies and the workstation computers. Our tri-modal imager supports unified simultaneous data acquisition, image reconstruction, and fused planar and tomographic image display. It thus provides intrinsically registered, potentially three-dimensional fluorescence and radiopharmaceutical distribution maps carrying molecular and functional information, correlated with the anatomy of the imaged object as provided by x-ray CT.
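The CT parameters above can be related to achievable spatial resolution with standard cone-beam geometry. The sketch below computes the geometric magnification M = SDD/SOD, the detector pixel size referred to the rotation axis (p/M), the focal-spot penumbra referred to the object (f(M - 1)/M), a rough quadrature estimate combining the two, and the source-to-detector distance at which a 24° full cone just spans the detector's 100 mm side. Only the focal spot, pixel size, cone angle and detector dimensions come from the text; the source-to-object and source-to-detector distances (SOD, SDD), the choice of the 100 mm side and the quadrature combination are assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ConeBeamGeometry:
    focal_spot_mm: float   # 0.035 mm (35 µm), from the text
    pixel_mm: float        # 0.048 mm (48 µm) detector pixel size, from the text
    sod_mm: float          # source-to-object (rotation axis) distance: hypothetical
    sdd_mm: float          # source-to-detector distance: hypothetical

    @property
    def magnification(self) -> float:
        return self.sdd_mm / self.sod_mm

    def effective_pixel_mm(self) -> float:
        """Detector pixel size referred to the rotation axis: p / M."""
        return self.pixel_mm / self.magnification

    def focal_blur_mm(self) -> float:
        """Focal-spot penumbra referred to the object plane: f * (M - 1) / M."""
        m = self.magnification
        return self.focal_spot_mm * (m - 1.0) / m

    def approx_resolution_mm(self) -> float:
        """Rough estimate only: quadrature sum of pixel and focal-spot blur."""
        return math.hypot(self.effective_pixel_mm(), self.focal_blur_mm())


def sdd_for_full_cone(detector_width_mm: float, cone_angle_deg: float) -> float:
    """Source-to-detector distance at which a cone of the given full angle just
    spans the detector width (central ray hitting the detector centre)."""
    return (detector_width_mm / 2.0) / math.tan(math.radians(cone_angle_deg) / 2.0)


if __name__ == "__main__":
    sdd = sdd_for_full_cone(100.0, 24.0)   # assumes the 24 degree cone spans the 100 mm side
    geo = ConeBeamGeometry(0.035, 0.048, sod_mm=sdd / 2.0, sdd_mm=sdd)  # hypothetical M = 2
    print(f"SDD = {sdd:.1f} mm, M = {geo.magnification:.2f}")
    print(f"effective pixel {geo.effective_pixel_mm() * 1000:.1f} um, "
          f"focal blur {geo.focal_blur_mm() * 1000:.1f} um, "
          f"combined ~{geo.approx_resolution_mm() * 1000:.1f} um")
```

Raising M shrinks the back-projected pixel but enlarges the focal-spot penumbra, which is why the two terms are reported separately.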

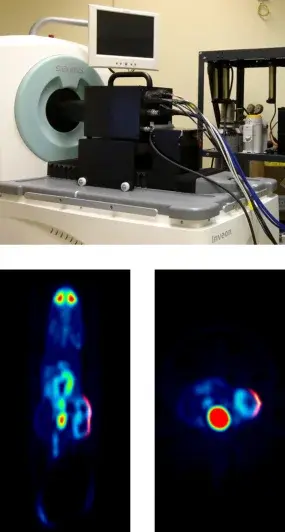

To enable what we call synchromodal optical imaging, we invented the first plenoptic camera for diagnostic research that not only creates three-dimensional (illumination and fluence) projections of the imaged subject but can also be integrated and used with PET and MRI (reference). The world's first simultaneously acquired [18F]FDG PET and pVEGF Luc BLI plenoptic image is shown in the left figure (top: experimental plenoptic system integrated into a Siemens Inveon PET for simultaneous data acquisition; bottom: fused PET and optical imaging (OI) data (reference)).

A complete instrumentation and mathematical framework for preclinical plenoptic imaging (POI) has been developed in which optical data are acquired by means of a microlens array (MLA) based light detector (MLA-D). The MLA-D was developed to enable POI, especially in synchromodal operation with secondary imaging modalities such as PET or MRI. An MLA-D consists of a (large-area) photon sensor array, a matched MLA that defines the field of view, and a septum mask of specific geometry made of anodized aluminum. The mask is positioned between the sensor and the MLA to suppress optical cross-talk and to shield the sensor's radiofrequency interference signal (essential when used inside an MRI system). The software framework, while freely parameterizable for any MLA-D, is tailored to a POI prototype system for preclinical synchromodal imaging comprising multiple cylindrically assembled, gantry-mounted, simultaneously operating MLA-Ds. In synchromodal operation, reconstructed tomographic volume data can be used for co-modal image fusion and also as a prior for estimating the imaged object's 3D surface by means of gradient vector flow. Superimposed planar or surface-aligned inverse mapping can be performed to estimate the emission light map and to fuse it with the boundary of the imaged object. Triangulation and subsequent optical reconstruction can be performed to estimate the internal three-dimensional emission light distribution. The behaviour of the framework is governed by a number of parameters controlling convergence and computational speed.
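The geometric core of plenoptic detection and triangulation can be illustrated with a strongly simplified model: each sensor pixel behind a microlens records light arriving along a ray through that microlens' optical centre, and a point seen by two microlenses (or two MLA-Ds) can be triangulated as the midpoint of the shortest segment between the two rays. The sketch below assumes an idealized pinhole-per-lenslet model with a hypothetical pitch and sensor-to-MLA gap; it is not the group's calibrated MLA-D model, and it ignores the septum mask and lens aberrations.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec, k: float) -> Vec:
    return (a[0] * k, a[1] * k, a[2] * k)


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _unit(a: Vec) -> Vec:
    return _scale(a, 1.0 / math.sqrt(_dot(a, a)))


def pixel_ray(lens_ij: Tuple[int, int], pixel_offset_mm: Tuple[float, float],
              pitch_mm: float, gap_mm: float) -> Tuple[Vec, Vec]:
    """Ray (origin, unit direction) seen by one sensor pixel.

    Idealized model (assumption): sensor plane at z = 0, MLA plane at z = gap_mm,
    lenslet (i, j) centred at (i * pitch, j * pitch, gap). pixel_offset_mm is the
    pixel position on the sensor relative to that lenslet's optical axis. The
    ray runs from the pixel through the lenslet centre towards the object.
    """
    i, j = lens_ij
    centre = (i * pitch_mm, j * pitch_mm, gap_mm)
    pixel = (centre[0] + pixel_offset_mm[0], centre[1] + pixel_offset_mm[1], 0.0)
    return centre, _unit(_sub(centre, pixel))


def triangulate(o1: Vec, d1: Vec, o2: Vec, d2: Vec) -> Vec:
    """Midpoint of the shortest segment between rays o1 + t*d1 and o2 + s*d2."""
    w0 = _sub(o1, o2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no unique closest point")
    t = (b * e - c * d) / denom
    s = (a * e - b * d) / denom
    return _scale(_add(_add(o1, _scale(d1, t)), _add(o2, _scale(d2, s))), 0.5)


if __name__ == "__main__":
    # Two neighbouring lenslets (hypothetical pitch 1 mm, gap 0.5 mm) whose
    # pixels were chosen to look at the point (0.5, 0, 10) mm.
    o1, d1 = pixel_ray((0, 0), (-0.25 / 9.5, 0.0), pitch_mm=1.0, gap_mm=0.5)
    o2, d2 = pixel_ray((1, 0), (0.25 / 9.5, 0.0), pitch_mm=1.0, gap_mm=0.5)
    print(triangulate(o1, d1, o2, d2))
```

Using the midpoint of the closest segment, rather than requiring an exact intersection, keeps the estimate defined when measurement noise makes the two rays skew.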

One of our main topics is the Monte Carlo simulation of emission imaging, that is, the propagation of eV and keV photons in heterogeneous phantoms and diverse scanner and detector geometries. All of this is handled within a single, easy-to-use multimodal framework, Musiré, which not only enables the use of 3D meshes and/or voxelized data across all involved modalities, with seamless transformation and adaptation between them, but also, for the first time, the integration of tumor lesions defined by tumor cell clouds that result from biological cancer simulations.
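Musiré delegates the actual transport to dedicated simulators. To illustrate the underlying principle of Monte Carlo photon propagation, here is a deliberately minimal sketch for optical photons in a homogeneous slab: free path lengths are sampled from the exponential distribution with the total attenuation coefficient μt = μa + μs, and each interaction is an absorption with probability μa/μt, otherwise an isotropic scattering event. Real tissue simulations use anisotropic phase functions (e.g. Henyey-Greenstein), refractive-index mismatch at boundaries and heterogeneous geometries; all coefficients below are hypothetical.

```python
import math
import random
from collections import Counter


def simulate_slab(n_photons: int, mu_a: float, mu_s: float, thickness: float,
                  seed: int = 0) -> Counter:
    """Analog Monte Carlo transport of photons through a homogeneous slab.

    Simplifying assumptions (illustration only): photons enter at z = 0 along +z,
    scattering is isotropic, boundaries are index-matched, and only the z
    direction cosine is tracked (exact for isotropic scattering in a laterally
    infinite slab). Coefficients in 1/mm, thickness in mm.
    Returns counts of 'reflected', 'transmitted' and 'absorbed' photons.
    """
    mu_t = mu_a + mu_s
    if mu_t <= 0:
        raise ValueError("total attenuation coefficient must be positive")
    rng = random.Random(seed)
    p_absorb = mu_a / mu_t
    tally: Counter = Counter()
    for _ in range(n_photons):
        z, uz = 0.0, 1.0
        while True:
            # Free path length sampled from p(s) = mu_t * exp(-mu_t * s).
            z += -math.log(1.0 - rng.random()) / mu_t * uz
            if z < 0.0:
                tally["reflected"] += 1
                break
            if z > thickness:
                tally["transmitted"] += 1
                break
            if rng.random() < p_absorb:       # the interaction is an absorption
                tally["absorbed"] += 1
                break
            uz = 2.0 * rng.random() - 1.0     # isotropic: cos(theta) uniform in [-1, 1]
    return tally


if __name__ == "__main__":
    n = 20000
    # Pure absorber: transmission should approach exp(-mu_a * thickness).
    t = simulate_slab(n, mu_a=1.0, mu_s=0.0, thickness=1.0, seed=1)
    print(t["transmitted"] / n, math.exp(-1.0))
    # Strongly scattering slab (hypothetical tissue-like ratio mu_s >> mu_a).
    print(simulate_slab(5000, mu_a=0.1, mu_s=10.0, thickness=2.0, seed=2))
```

The pure-absorber case is a convenient self-check, because without scattering the transmitted fraction must reproduce the Beer-Lambert law.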

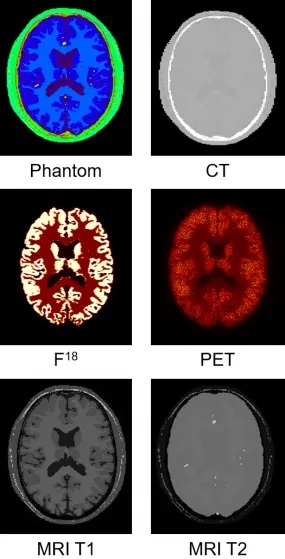

With Musiré, we propose a software-based workflow for managing the execution of simulation and image reconstruction packages for SPECT, PET, cone-beam CT (CBCT), MRI, BLI and FMI in single and multimodal biomedical imaging applications. The workflow consists of a Bash script that provides the user interface and organizes the data flow between dedicated programs for simulation and reconstruction. The currently incorporated simulation programs are GATE for Monte Carlo simulation of SPECT, PET and CBCT, SpinScenario for simulating MRI, and Lipros for Monte Carlo simulation of BLI and FMI. The currently incorporated image reconstruction programs include CASToR for SPECT and PET and RTK for CBCT.
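The orchestration role of the Bash script can be pictured as a lookup from modality to the simulation and reconstruction packages named above, followed by an ordered job plan. The Python sketch below encodes only the pairings stated in the text; the job tuples are hypothetical placeholders, the "simulate everything first" ordering is an assumption, and nothing here reproduces the actual command lines of GATE, SpinScenario, Lipros, CASToR or RTK.

```python
from typing import Dict, List, Optional, Tuple

# Modality-to-package pairings as listed in the text. This table illustrates
# the orchestration logic only; it is not the actual Musiré script.
SIMULATORS: Dict[str, str] = {
    "SPECT": "GATE", "PET": "GATE", "CBCT": "GATE",
    "MRI": "SpinScenario",
    "BLI": "Lipros", "FMI": "Lipros",
}
# No reconstruction package is named for MRI, BLI or FMI in the text.
RECONSTRUCTORS: Dict[str, Optional[str]] = {
    "SPECT": "CASToR", "PET": "CASToR", "CBCT": "RTK",
    "MRI": None, "BLI": None, "FMI": None,
}

Job = Tuple[str, str, str]   # (stage, modality, package)


def plan_jobs(modalities: List[str]) -> List[Job]:
    """Order the work for a (multimodal) study: every simulation first, then
    every reconstruction for which a package is available. The ordering is an
    assumption; a real workflow could also pipeline per modality."""
    unknown = [m for m in modalities if m not in SIMULATORS]
    if unknown:
        raise ValueError(f"unsupported modalities: {unknown}")
    jobs: List[Job] = [("simulate", m, SIMULATORS[m]) for m in modalities]
    for m in modalities:
        package = RECONSTRUCTORS[m]
        if package is not None:
            jobs.append(("reconstruct", m, package))
    return jobs


if __name__ == "__main__":
    for job in plan_jobs(["PET", "CBCT", "BLI"]):
        print(job)
```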

The MetaImage (mhd) standard is used as the data format for voxelized phantoms and images. MeshLab project (mlp) containers incorporating polygon meshes and point clouds in the Stanford triangle format (ply) are employed to represent anatomical structures for optical simulation and to represent tumour cell inserts. A number of auxiliary programs have been developed for data transformation and adaptive parameter assignment. The workflow automatically distributes jobs to, and consolidates results from, any number of Linux workstations and CPU cores.
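For readers unfamiliar with MetaImage: an .mhd file is a short plain-text header of `key = value` lines that points to a raw binary data file. The sketch below writes a small float32 volume using standard MetaImage header keys (ObjectType, NDims, BinaryData, BinaryDataByteOrderMSB, DimSize, ElementSpacing, ElementType, ElementDataFile) and reads the header back. The file name and the choice of float32 are illustrative; the group's own auxiliary programs may write additional fields.

```python
import array
import os
import sys
import tempfile
from typing import Dict, Sequence, Tuple


def write_mhd(path_mhd: str, data: Sequence[float], dims: Tuple[int, int, int],
              spacing_mm: Tuple[float, float, float]) -> str:
    """Write a 3D volume as a MetaImage header (.mhd) plus a raw float32 file.

    `data` must be ordered with x varying fastest, then y, then z.
    Returns the path of the raw data file.
    """
    nx, ny, nz = dims
    if len(data) != nx * ny * nz:
        raise ValueError("data length does not match dims")
    raw_path = os.path.splitext(path_mhd)[0] + ".raw"
    values = array.array("f", data)          # C float, 32-bit on common platforms
    if sys.byteorder == "big":
        values.byteswap()                    # header declares little-endian data
    with open(raw_path, "wb") as fh:
        values.tofile(fh)
    header = [
        "ObjectType = Image",
        "NDims = 3",
        "BinaryData = True",
        "BinaryDataByteOrderMSB = False",
        f"DimSize = {nx} {ny} {nz}",
        "ElementSpacing = " + " ".join(str(s) for s in spacing_mm),
        "ElementType = MET_FLOAT",
        f"ElementDataFile = {os.path.basename(raw_path)}",  # must be the last key
    ]
    with open(path_mhd, "w") as fh:
        fh.write("\n".join(header) + "\n")
    return raw_path


def read_mhd_header(path_mhd: str) -> Dict[str, str]:
    """Parse the key = value lines of an .mhd header into a dict."""
    with open(path_mhd) as fh:
        return dict(line.split(" = ", 1) for line in fh.read().splitlines() if " = " in line)


if __name__ == "__main__":
    out_dir = tempfile.mkdtemp()
    mhd = os.path.join(out_dir, "phantom.mhd")   # hypothetical file name
    write_mhd(mhd, [float(i) for i in range(24)], (2, 3, 4), (0.5, 0.5, 1.0))
    print(read_mhd_header(mhd))
```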

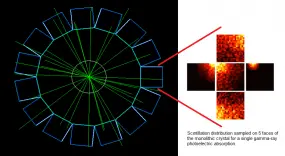

This project seeks to contribute to the advancement of PET instrumentation and to the development of novel multimodal techniques. From preclinical to whole-body clinical systems, the focus is on investigating monolithic detector designs, primarily through computational simulations supported by basic experimental tests. The simulation approach includes the full simulation of scintillation photons within the crystals and is therefore a powerful tool for jointly optimising the scanner geometry and the gamma-ray point-of-interaction determination algorithm, which depend on each other. A prototype multimodal preclinical scanner integrating PET imaging with optical tomography is currently being developed using these tools.
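The page does not specify which point-of-interaction algorithm is used. As a point of reference, the classical baseline for monolithic crystals is Anger-type centre-of-gravity (CoG) positioning of the scintillation light distribution on the photodetector pixels; it is known to compress positions towards the crystal centre near the edges, which is one reason more elaborate estimators are studied. The sketch below computes the CoG from a 2D array of photodetector counts, together with the RMS spread of the light distribution, which is sometimes used as a depth-of-interaction indicator (interactions closer to the photodetector tend to produce narrower distributions). Pixel pitch and counts are hypothetical.

```python
import math
from typing import List, Tuple


def centroid_and_spread(counts: List[List[float]],
                        pitch_mm: float) -> Tuple[float, float, float]:
    """Centre-of-gravity position and RMS spread of a scintillation light
    distribution sampled on a photodetector grid counts[row][col].

    Pixel centres lie on a regular grid with the origin at the grid centre.
    Returns (x_mm, y_mm, sigma_mm); sigma is the RMS radial distance of the
    detected light from the centroid.
    """
    rows, cols = len(counts), len(counts[0])
    total = sum(sum(row) for row in counts)
    if total <= 0:
        raise ValueError("no light detected")

    def centre(k: int, n: int) -> float:
        return (k - (n - 1) / 2.0) * pitch_mm

    cells = [(counts[r][c], centre(c, cols), centre(r, rows))
             for r in range(rows) for c in range(cols)]
    x = sum(w * cx for w, cx, _ in cells) / total
    y = sum(w * cy for w, _, cy in cells) / total
    var = sum(w * ((cx - x) ** 2 + (cy - y) ** 2) for w, cx, cy in cells) / total
    return x, y, math.sqrt(var)


if __name__ == "__main__":
    narrow = [[0, 1, 0], [1, 4, 1], [0, 1, 0]]   # e.g. interaction near the photodetector
    wide = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]     # e.g. interaction far from it
    print(centroid_and_spread(narrow, pitch_mm=2.0))
    print(centroid_and_spread(wide, pitch_mm=2.0))
```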

Tomographic system design

Non-contact in vivo optical imaging systems specifically designed for bioluminescence and fluorescence molecular imaging (BLI, FMI) in small animals and employing lens-based cameras have become standard in preclinical laboratories. Such instruments are planar imaging systems that acquire two-dimensional (2D) images of a three-dimensional (3D) in vivo emission flux. When planar light emission imaging is performed on complex surface geometries, both the local intensities of the emitted rays and their spatial distribution vary with the camera's position and angle relative to the animal's surface. While these measurement fluctuations could be minimized by detecting light rays orthogonally to each surface point, this is impossible to achieve with a single whole-body 2D light projection.
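The angular dependence described above can be illustrated under a common simplifying assumption: light exiting a diffusing surface is often approximated as Lambertian, so the power a distant camera collects from a small surface patch scales with the cosine of the angle between the patch's outward normal and the direction to the camera. The sketch below (hypothetical geometry, distant-camera approximation, no tissue optics) shows how identically emitting patches around a curved body are recorded with different weights by a single view, and how patches facing away are not recorded at all.

```python
import math
from typing import Sequence


def lambertian_weight(normal: Sequence[float], to_camera: Sequence[float]) -> float:
    """Relative power a distant camera collects from a small Lambertian surface
    patch: cos(theta) between the outward normal and the direction to the
    camera, or 0 if the patch faces away from the camera."""
    n_len = math.sqrt(sum(v * v for v in normal))
    c_len = math.sqrt(sum(v * v for v in to_camera))
    cos_theta = sum(a * b for a, b in zip(normal, to_camera)) / (n_len * c_len)
    return max(0.0, cos_theta)


if __name__ == "__main__":
    # Patches around a cylinder (a crude stand-in for a mouse torso cross-section),
    # all assumed to emit the same light; one camera looks from +y.
    camera_dir = (0.0, 1.0, 0.0)
    for deg in range(0, 181, 30):
        phi = math.radians(deg)
        normal = (math.sin(phi), math.cos(phi), 0.0)
        print(f"{deg:3d} deg from camera axis -> relative signal "
              f"{lambertian_weight(normal, camera_dir):.2f}")
```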

To address the problem of measuring the in vivo emission light flux exiting the hull of a 3D object while preserving and estimating its a priori unknown 3D boundary, we report on the development and first simulation results of a plenoptic imaging system specifically designed for in vivo BLI and FMI applications. Thanks to multiple integrated, arbitrarily positionable laser diode light sources that impose point as well as bright-field illumination patterns, the system can perform multispectral fluorescence mediated tomography (FMT) and diffuse optical tomography (DOT) without requiring a complementary imaging procedure for surface detection.

A plenoptic imaging system for preclinical in vivo imaging applications has been developed (figure left). The figure shows the raw plenoptic data obtained under bright-field illumination when a three-dimensional (3D) object is imaged from six plenoptic camera positions. Acquisition setup and data from a simulation study: the imaging system consists of six plenoptic cameras, each with a sensitive area of 25 mm × 50 mm and nearly orthographic projection; (retractable) diffuse fiber line sources with the length of the detectors are located in the spaces between the light detectors for object illumination (top). The segmented volume of a reconstructed x-ray CT mouse data set is used as the input object (middle). Resulting raw plenoptic camera data as detected from six projection angles over 360° (bottom).
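The acquisition geometry described above (six cameras at equal angular spacing around 360°, nearly orthographic projection) can be sketched as follows. The purely orthographic model, the axis convention (rotation about the z axis, which is also the object's long axis) and the assignment of the 25 mm side to the transverse sensor axis and the 50 mm side to the axial sensor axis are assumptions for illustration; occlusion by the object itself is ignored.

```python
import math
from typing import List, Optional, Tuple

# Sensitive area per camera from the text; assigning 25 mm to the transverse
# (u) axis and 50 mm to the axial (v) axis is an assumption.
SENSOR_U_MM, SENSOR_V_MM = 25.0, 50.0


def camera_angles(n_cameras: int = 6) -> List[float]:
    """Equally spaced view angles (radians) covering 360 degrees."""
    return [2.0 * math.pi * k / n_cameras for k in range(n_cameras)]


def project_orthographic(point: Tuple[float, float, float],
                         angle: float) -> Optional[Tuple[float, float]]:
    """Orthographic projection of (x, y, z) in mm onto the sensor of a camera
    placed in direction (cos angle, sin angle, 0) and looking at the z axis.

    Returns sensor coordinates (u, v) in mm, or None if the projection falls
    outside the sensitive area. Occlusion is not modelled.
    """
    x, y, z = point
    u = -x * math.sin(angle) + y * math.cos(angle)   # along (-sin a, cos a, 0)
    v = z                                            # axial direction
    if abs(u) > SENSOR_U_MM / 2.0 or abs(v) > SENSOR_V_MM / 2.0:
        return None
    return u, v


if __name__ == "__main__":
    marker = (3.0, -2.0, 10.0)   # hypothetical point on the object, mm
    for a in camera_angles():
        print(f"{math.degrees(a):5.1f} deg -> {project_orthographic(marker, a)}")
```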

Computational mathematics

Computational imaging involves multiple imaging strategies. Here, surface reconstruction from multiview plenoptic projection image data is described (reference). The technique is adapted for in vivo small animal imaging, specifically for imaging nude mice, and requires neither an additional imaging step (e.g. by means of a secondary structural modality) nor additional hardware (e.g. laser scanning approaches). Every candidate point within the field of view (FOV) is evaluated by a photo-consistency measure that uses the sensor light information provided by the elemental images (EIs). Since the superposition of adjacent EIs yields complementary information for any point within the FOV, the three-dimensional (3D) surface of the imaged object is estimated by a graph-cuts-based method through global energy minimization. The proposed surface reconstruction was evaluated on simulated MLA-D data incorporating a reconstructed mouse data volume acquired by x-ray CT. Compared with a previously presented back-projection-based surface reconstruction method, the proposed technique yields a significantly lower error rate. Moreover, while the back-projection-based method may fail to resolve concave areas, the new approach resolves them. Our results further indicate that the proposed method achieves high accuracy with a small number of projections.
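The exact photo-consistency measure and the graph-cuts energy are given in the cited work and are not reproduced here. To illustrate the idea only, the sketch below uses a common textbook stand-in: a candidate 3D point is scored by the variance of the intensities it maps to in the elemental images that see it (low variance means the views agree, as expected for a point on the surface), and, instead of global graph-cuts optimization, the most consistent depth is chosen independently along a single line of sight (a winner-takes-all simplification without any smoothness term). The sampler functions and the toy data are hypothetical.

```python
from statistics import pvariance
from typing import Callable, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
# Hypothetical sampler: the intensity one EI records for a 3D point, or None
# if that EI does not see the point.
Sampler = Callable[[Point], Optional[float]]


def photo_consistency(point: Point, samplers: Sequence[Sampler],
                      min_views: int = 2) -> float:
    """Variance of the intensities observed for `point` across elemental
    images; lower is more consistent. Returns +inf if too few EIs see it."""
    values = [v for v in (s(point) for s in samplers) if v is not None]
    if len(values) < min_views:
        return float("inf")
    return pvariance(values)


def best_depth(ray_origin: Point, ray_dir: Point, depths: Sequence[float],
               samplers: Sequence[Sampler]) -> float:
    """Winner-takes-all depth along one line of sight (no smoothness term,
    unlike a graph-cuts energy)."""
    def at(t: float) -> Point:
        return tuple(o + t * d for o, d in zip(ray_origin, ray_dir))
    return min(depths, key=lambda t: photo_consistency(at(t), samplers))


if __name__ == "__main__":
    # Toy scene: a uniformly bright surface at z = 5 mm. Off the surface, each
    # of three views sees a different (view-dependent) intensity.
    samplers = [lambda p, k=k: 1.0 + k * (p[2] - 5.0) for k in range(3)]
    print(best_depth((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [3.0, 4.0, 5.0, 6.0, 7.0], samplers))
```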

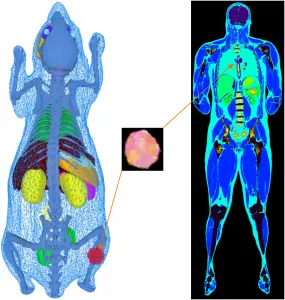

In our simulation packages, such as Musiré (see also Simulation tools for multimodal imaging), a single object description (phantom, atlas) can be used to simulate all imaging modalities. Whereas voxelized phantoms are generally employed for SPECT, PET, CT and MRI simulations, a mesh-based representation of anatomical structures is preferred for simulating optical photons (BLI, FMI), since the imaged object's boundary carries crucial information on the emitted photon flux, a decisive factor for the accuracy of image reconstruction or inverse light-field mapping.

Our phantoms include (i) a three-dimensional (x, y, z) voxelized tissue/material atlas encoded as MetaIO (mhd) and (ii) a three-dimensional polygonal (triangle) mesh for the tissue atlas geometry, representing general anatomy (and objects), encoded in the Stanford triangle format (ply). Our programs are also compatible with three-dimensional point clouds encoded as ply. Multiple meshes and point clouds can be encapsulated within a MeshLab project (mlp) container. From these, our frameworks automatically convert the object description into the appropriate format for each simulator. Passing parameter files or scripts also allows simulator-specific phantom representation models to be used. Physical attributes such as materials, densities, source activities, dye concentrations, relaxation times and others are assigned to mhd- or ply-represented atlas regions by simple look-up table (dat) files. Point clouds are intended to represent (multi-lesion) heterogeneous tumour cell distributions, such as those generated by biologically derived models of tumour growth dynamics. Anatomical phantoms can be extended with arbitrarily placeable tumour inserts, which can represent solid, multi-lesion, necrotic or heterogeneous entities with millions of cells per cubic centimetre of tissue. Depending on the simulated imaging modality, point clouds can be used at their native resolution. This is implemented for optical imaging, where the bioluminescence light distribution is sampled directly from the spatial tumour cell distribution. However, since tumour cells are of the order of 10 μm to 50 μm in size, it would be inefficient to use this spatial resolution in, e.g., nuclear imaging simulations. Hence, for the other modalities, point cloud tumour inserts are automatically down-sampled to the spatial resolution of the host phantom.
Using specific command-line options, the tumour cell density per voxel can be scaled into a source activity range or into other physical material properties, such as T1 and T2 relaxation times.
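The down-sampling and scaling steps described above can be sketched as follows: tumour cell positions are binned into the host phantom's voxel grid (cells per voxel), and the resulting density is mapped linearly onto a user-chosen range, e.g. a source activity range or a range of relaxation times. The linear mapping, the function names and the example values are assumptions; the page does not state which mapping the command-line options apply.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Index = Tuple[int, int, int]
Point = Tuple[float, float, float]


def bin_cells(cells: Iterable[Point], origin: Point, spacing: Point,
              dims: Index) -> Counter:
    """Down-sample a tumour cell point cloud to the host phantom grid by
    counting cells per voxel; cells outside the grid are ignored."""
    counts: Counter = Counter()
    for p in cells:
        idx = tuple(int((p[a] - origin[a]) // spacing[a]) for a in range(3))
        if all(0 <= idx[a] < dims[a] for a in range(3)):
            counts[idx] += 1
    return counts


def scale_density(counts: Counter, lo: float, hi: float) -> Dict[Index, float]:
    """Map cells-per-voxel linearly onto [lo, hi] (e.g. an activity range):
    the densest voxel receives hi, and a voxel with zero cells would receive lo.
    Linear scaling is an assumption made for illustration."""
    if not counts:
        return {}
    peak = max(counts.values())
    return {idx: lo + (hi - lo) * n / peak for idx, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical cell positions in mm, host grid of 2x2x2 voxels of 1 mm.
    cells = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (1.5, 0.2, 0.2), (5.0, 5.0, 5.0)]
    counts = bin_cells(cells, origin=(0.0, 0.0, 0.0), spacing=(1.0, 1.0, 1.0), dims=(2, 2, 2))
    print(counts)
    print(scale_density(counts, lo=0.0, hi=100.0))
```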

Contact

Dr. Jörg Peter

Group leader